MITRE published a fresh set of EDR evaluation results! This time MITRE emulated APT29 against a significantly larger group of twenty-one Endpoint Detection and Response (EDR) vendors. Using the raw data from MITRE and some analysis in Splunk, it is possible to get an overview of detection performance across vendors, which is hard to get from the MITRE webpage itself.

Hilariously, and just like last time, numerous vendors immediately congratulated themselves or otherwise reached for words like leading, leader, or leadership, even though MITRE does not assign scores, rankings, or ratings. A small sample:

- "F-Secure excels again [...]" (source)

- "[...] demonstrates FireEye [...] leadership [...]" (source)

- "[...] solidify Cortex XDR as a leader in EDR" (source)

- "[...] SentinelOne Leading in EDR Performance" (source)

The charts below were generated in Splunk from the JSON files on the MITRE webpage, after preprocessing them with a Python script that onboards the APT29 EDR evaluations into Splunk.

You may also like to read part one covering APT3 here

Leaders per detection category

MITRE defines six main detection types for each of the one hundred and forty steps from APT29.

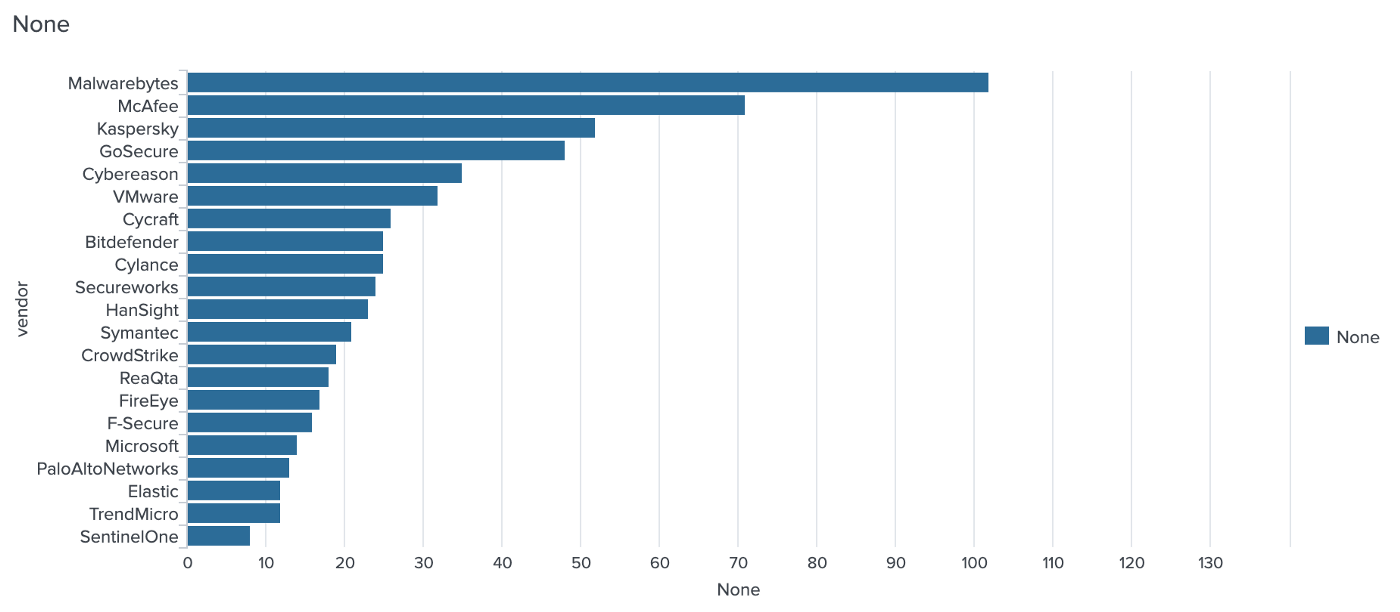
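
The per-vendor charts in this section boil down to counting, for each vendor, how many steps received each detection type. Here is a minimal Python sketch of that tally, assuming the data has already been flattened into one event per vendor and step. The `Vendor` field name, the vendor names, and the sample detection labels other than `None` are illustrative assumptions, not MITRE's raw JSON layout.

```python
from collections import Counter, defaultdict

# Hypothetical flattened events: one per vendor and step. "Vendor" and the
# sample values are assumptions made for this sketch only.
events = [
    {"Vendor": "VendorA", "Step": "1.A.1", "Detection": ["Telemetry", "Technique"]},
    {"Vendor": "VendorA", "Step": "1.A.2", "Detection": ["None"]},
    {"Vendor": "VendorB", "Step": "1.A.1", "Detection": ["Telemetry"]},
    {"Vendor": "VendorB", "Step": "1.A.2", "Detection": ["General"]},
]


def tally_by_vendor(events):
    """Count, per vendor, how many steps received each detection type."""
    tallies = defaultdict(Counter)
    for event in events:
        # A step can carry several detection types; count each type once per step.
        for detection_type in set(event["Detection"]):
            tallies[event["Vendor"]][detection_type] += 1
    return tallies


tallies = tally_by_vendor(events)
for vendor in sorted(tallies):
    print(vendor, dict(tallies[vendor]))
```

Each bar in a chart then corresponds to one entry such as `tallies["VendorA"]["Telemetry"]`.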

Which EDR detects the least?

Let’s start by looking at the leader of detection fails. Longer bars are worse:

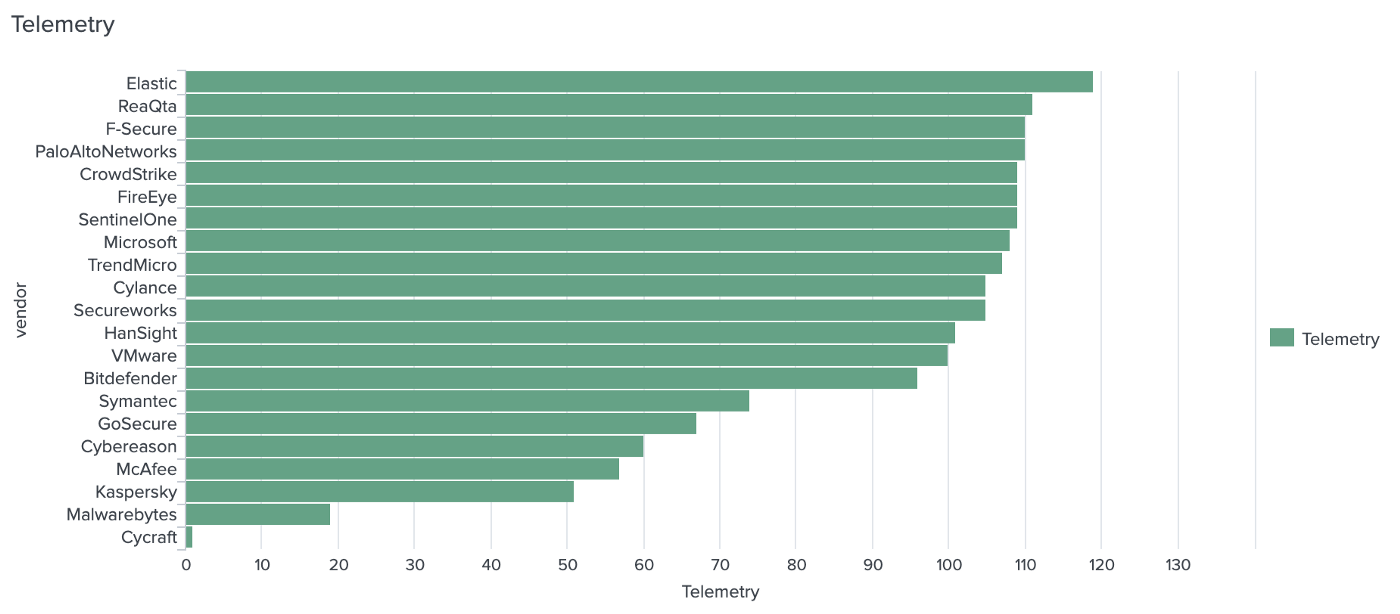

Which EDR vendor logs how many APT29 steps?

Longer bars are better:

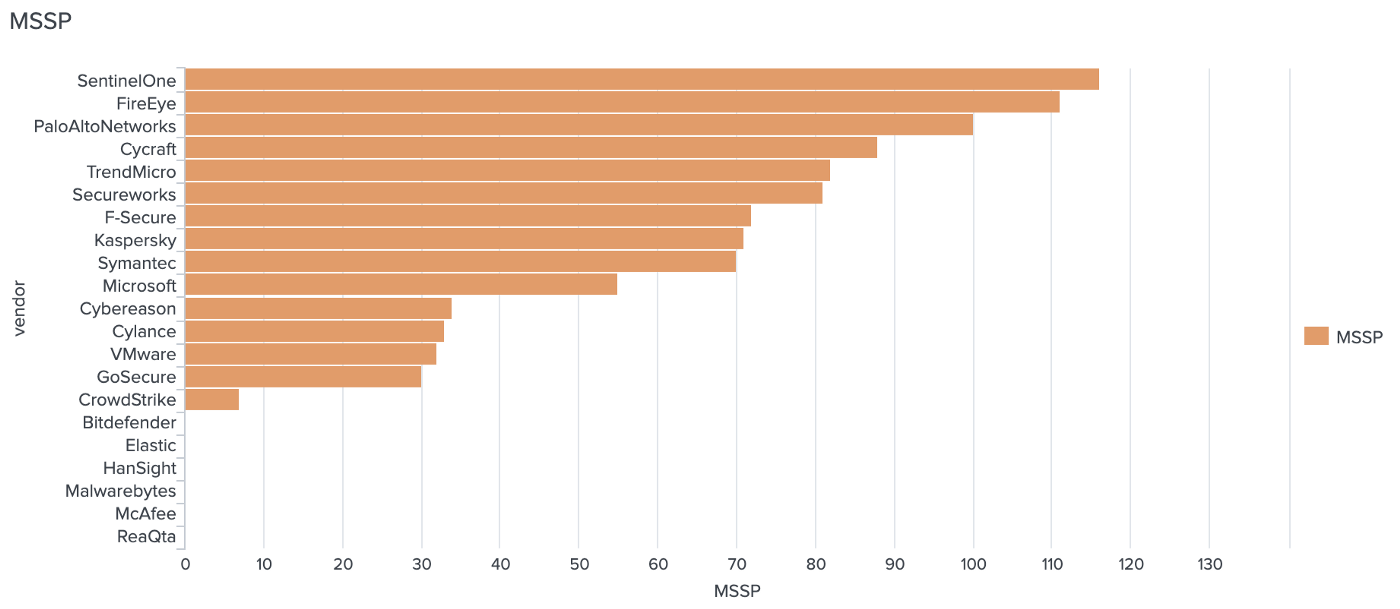

How did the human-powered managed service score?

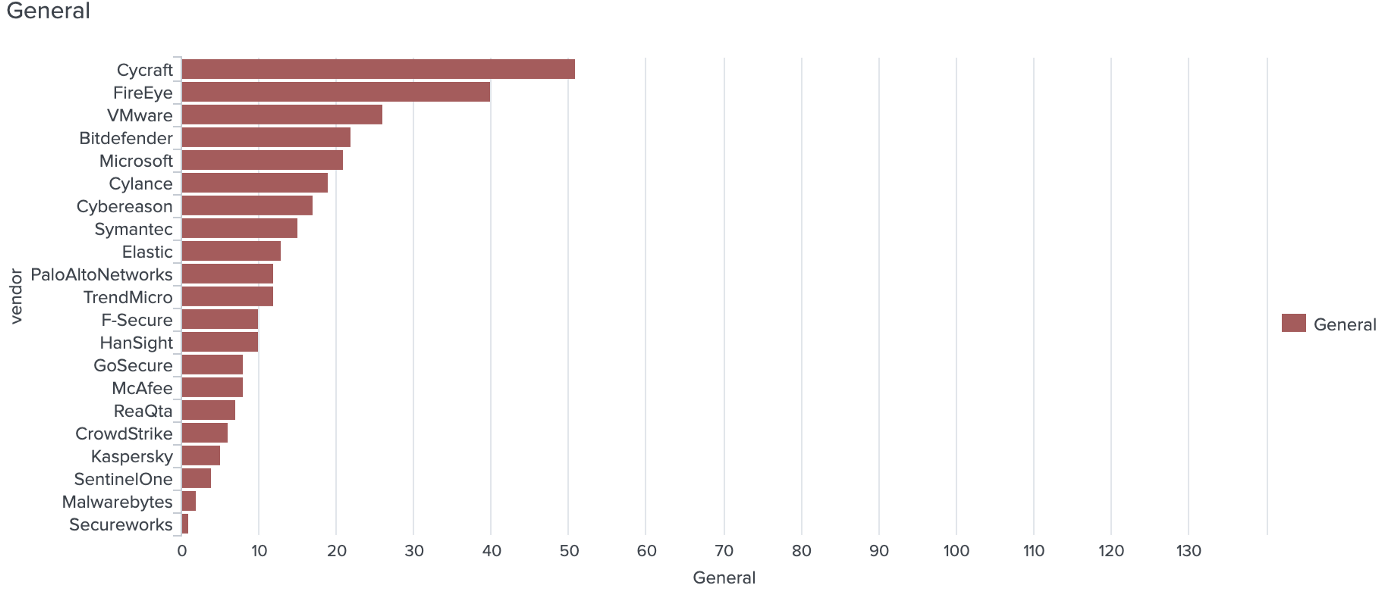

Which EDR detects how many APT29 steps as suspicious?

In this case, the detection by the EDRs is a lot more specific than telemetry, but less specific than tactic or technique:

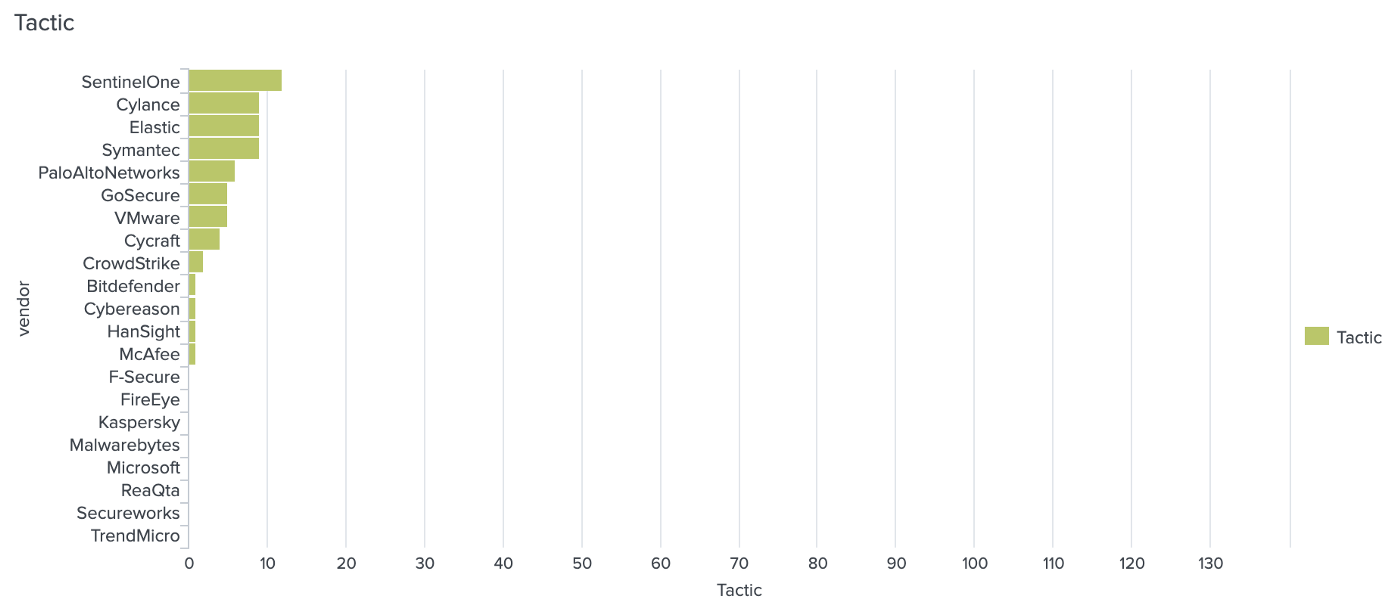

Which EDR detects how many APT29 step tactics?

Here the detection by the EDRs included the tactic used by APT29:

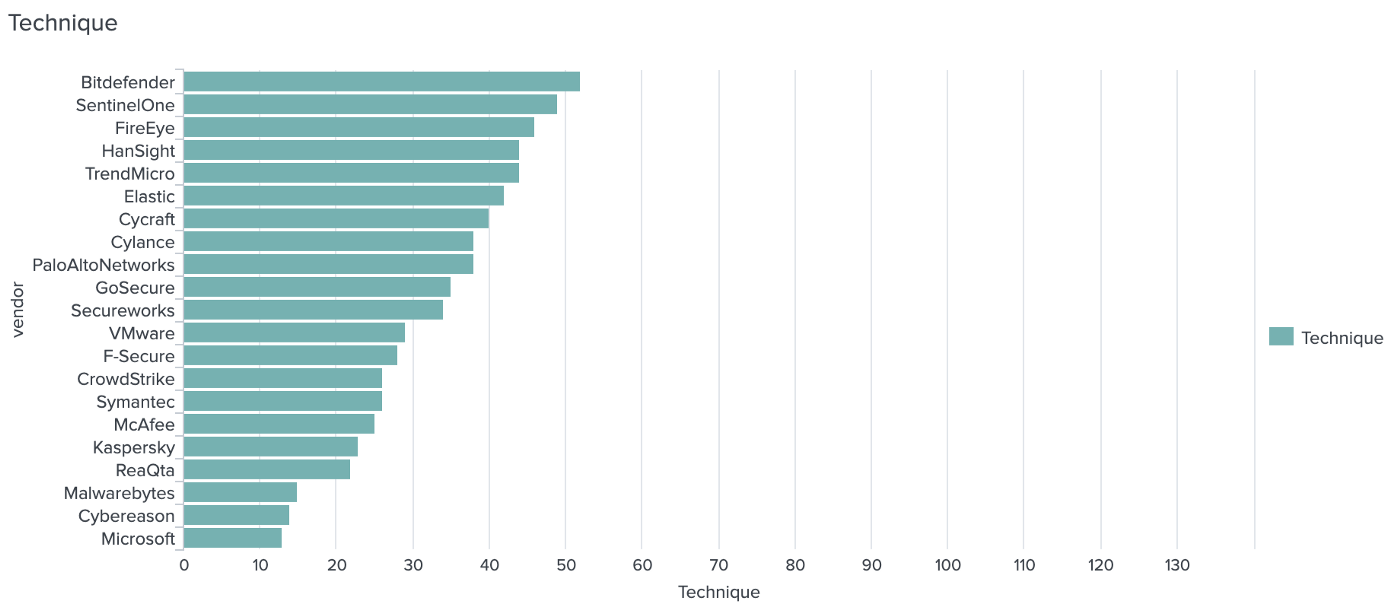

Which EDR detects how many APT29 step techniques?

Finally, the most specific detection category, showing which APT29 step techniques were detected by the EDRs:

Leaders per detection category

Other dimensions were evaluated by MITRE too, some of which you may or may not care about.

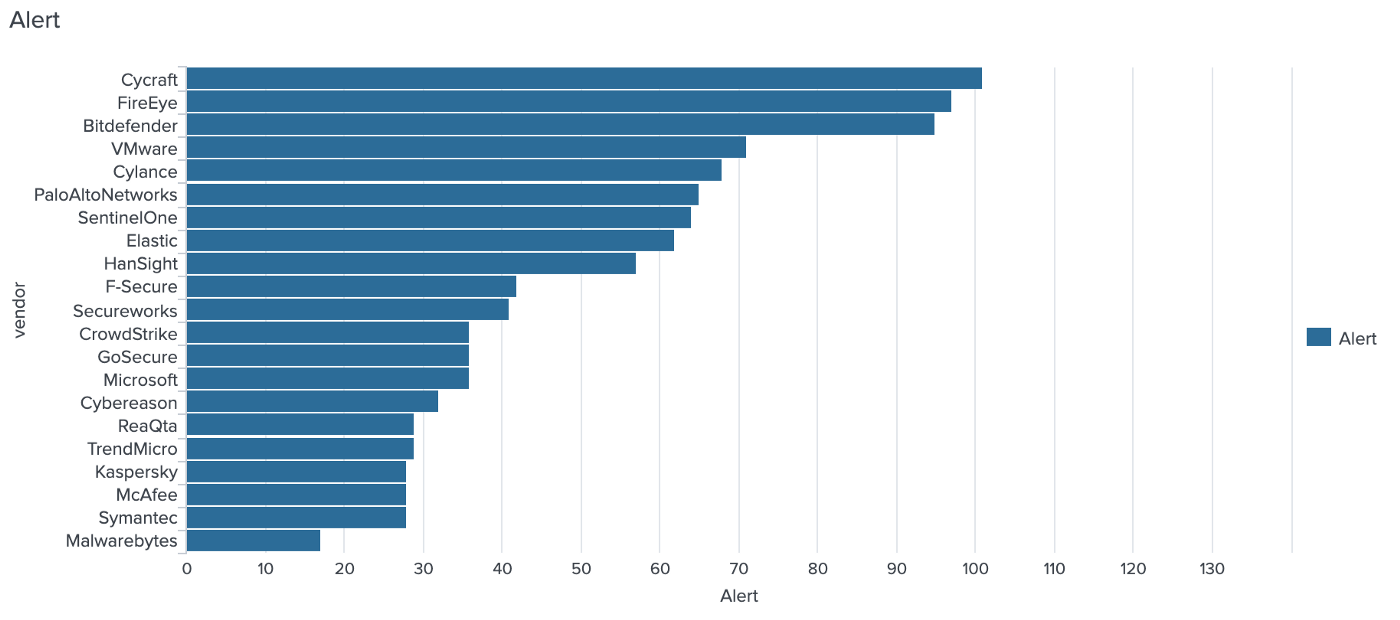

Which EDR alerts the most on APT29 steps?

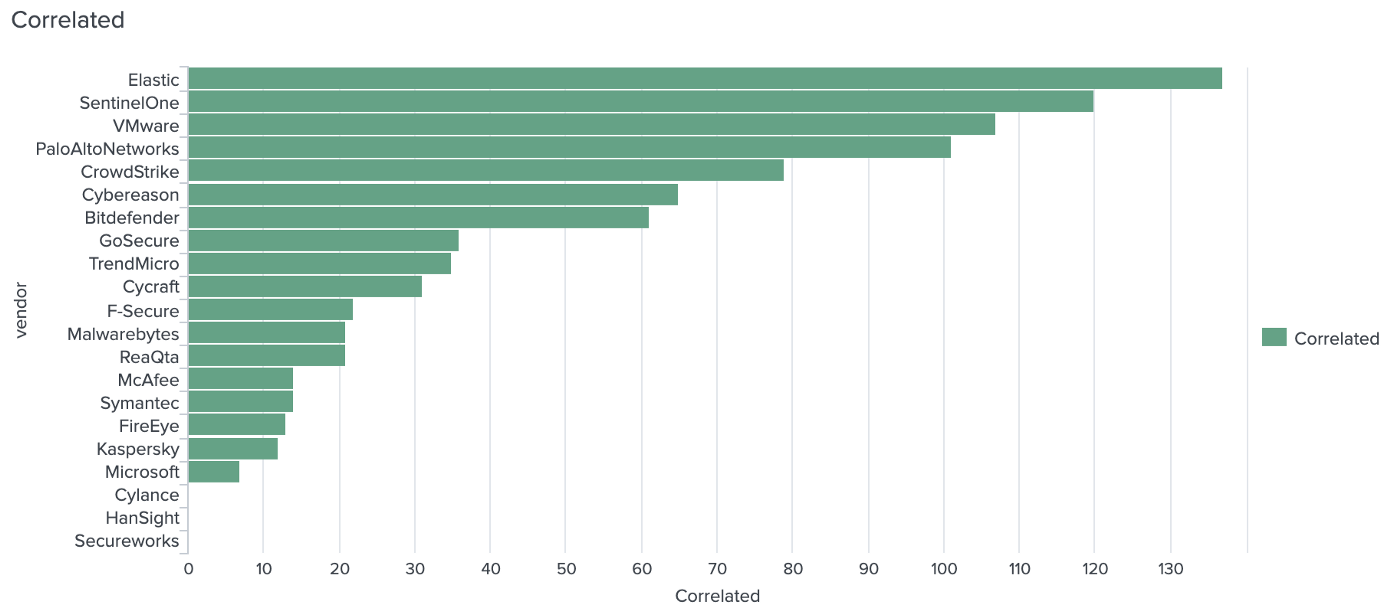

Which EDR shows the most event lineage for APT29 steps?

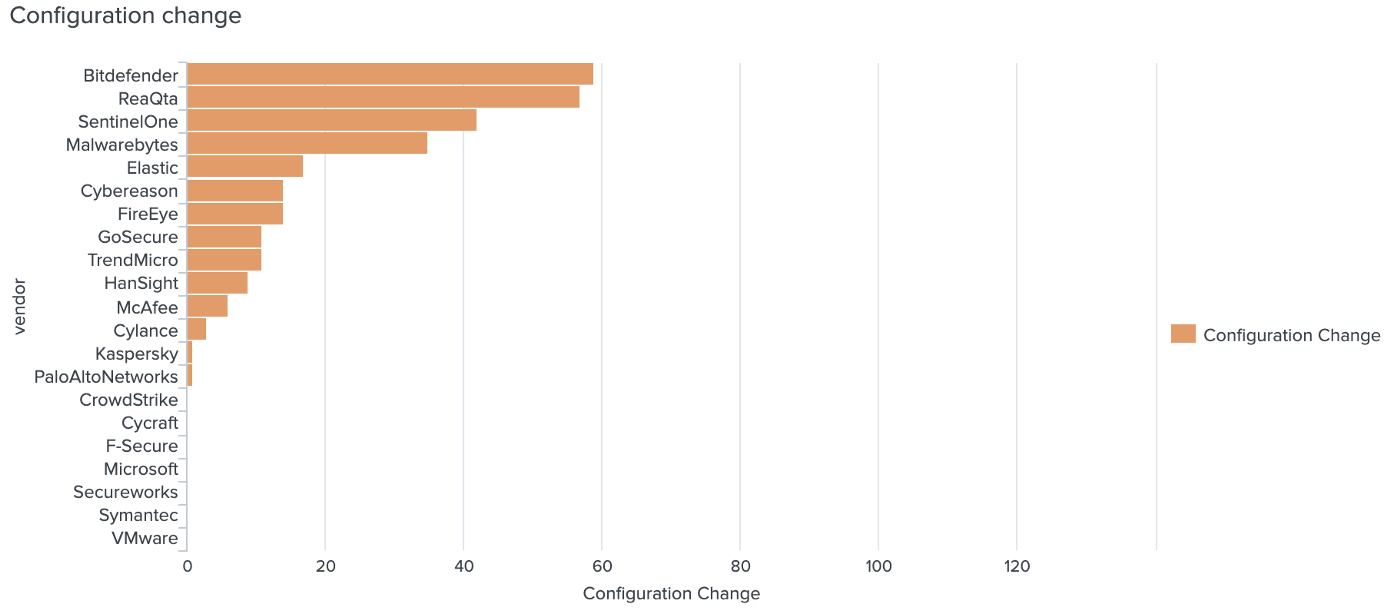

Who needed the most configuration changes to make APT29 steps visible?

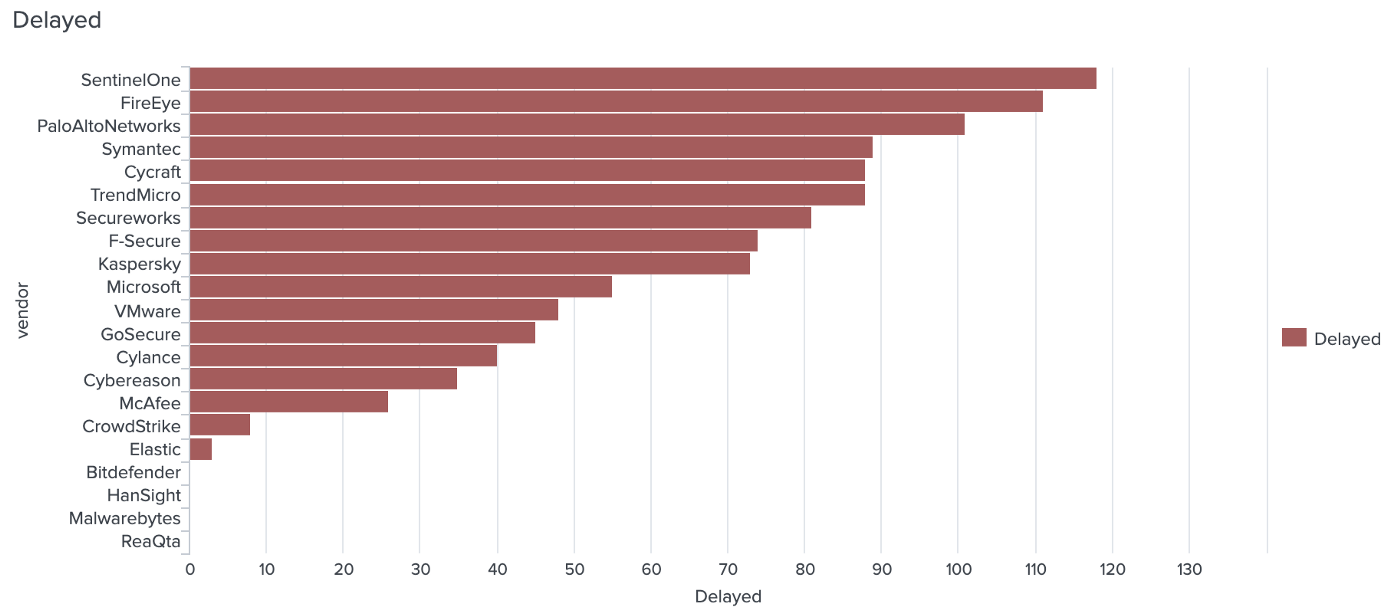

Who had the least realtime alerting for APT29 steps?

Who detected APT29 steps but only after manually pulling endpoint data?

Which are the least detected APT29 steps?

Interestingly for blue teamers, this data can also be used to ask questions like: which steps from APT29 were least likely to be detected by EDR vendors?

- Step 1.A.4 "Used RC4 stream cipher to encrypt C2 (192.168.0.5) traffic" went undetected by all 21 vendors

- Step 10.B.2 "Executed PowerShell payload via the CreateProcessWithToken API" went undetected by 17 of the 21 vendors

- Step 17.B.1 "Read and collected a local file using PowerShell" went undetected by 17 of the 21 vendors

- Step 6.A.2 "Executed the CryptUnprotectedData API call to decrypt Chrome passwords" went undetected by 17 of the 21 vendors

- Step 10.B.1 "Executed LNK payload (hostui.lnk) in Startup Folder on user login" went undetected by 16 of the 21 vendors

I’ve used this Splunk search to create the list above:

    index=main sourcetype="attackeval:json" source="*APT29*" Detection{}="None"
    | eval OperationalFlow=trim(Step) . " - " . trim(Procedure)
    | stats count by OperationalFlow
    | sort - count
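
If you don't have Splunk at hand, the same logic can be sketched in plain Python. This is a rough equivalent rather than the script used for this post. It assumes the events are already loaded as dictionaries with the `Step`, `Procedure`, and `Detection` fields the search relies on, and the sample records are made up.

```python
from collections import Counter

# Made-up events mirroring the fields used in the search: one event per
# vendor and step, with Detection holding a list of detection types.
events = [
    {"Step": " 1.A.1", "Procedure": "Example procedure one ", "Detection": ["None"]},
    {"Step": "1.A.1", "Procedure": "Example procedure one", "Detection": ["None"]},
    {"Step": "1.A.1", "Procedure": "Example procedure one", "Detection": ["Telemetry"]},
    {"Step": "2.B.3", "Procedure": "Example procedure two", "Detection": ["None"]},
    {"Step": "2.B.3", "Procedure": "Example procedure two", "Detection": ["Technique", "Telemetry"]},
]


def least_detected(events):
    """Rank steps by the number of events that recorded no detection."""
    counts = Counter(
        # eval OperationalFlow=trim(Step) . " - " . trim(Procedure)
        f'{event["Step"].strip()} - {event["Procedure"].strip()}'
        for event in events
        if "None" in event["Detection"]  # Detection{}="None"
    )
    # stats count by OperationalFlow | sort - count
    return counts.most_common()


ranking = least_detected(events)
for operational_flow, count in ranking:
    print(count, operational_flow)
```

Because there is one event per vendor and step, the count per step is the number of vendors that missed it.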

Which are the most detected APT29 steps?

Conversely, the steps below were detected by most EDR vendors:

- Step 14.B.4 "Dumped plaintext credentials using Mimikatz (m.exe)" was detected by 19 out of 21.

- Step 16.D.2 "Dumped the KRBTGT hash on the domain controller host NewYork (10.0.0.4) using Mimikatz (m.exe)" was detected by 19 out of 21.

- Step 11.A.11 "Established Registry Run key persistence using PowerShell" was detected by 18 out of 21.

- Step 20.B.3 "Added a new user to the remote host Scranton (10.0.1.4) using net.exe" was detected by 18 out of 21.

- Step 1.A.2 "Used unicode right-to-left override (RTLO) character to obfuscate file name rcs.3aka3.doc (originally cod.3aka.scr)" was detected by 16 out of 21.
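
A list like this can be produced by inverting the filter. The Python sketch below counts, per step, the vendors with at least one detection type other than `None`. Treating any non-`None` entry as "detected" is an assumption about how to read the data, and the `Vendor` field and sample records are hypothetical.

```python
from collections import defaultdict

# Hypothetical events: one per vendor and step. Field names and values
# are assumptions made for this sketch.
events = [
    {"Vendor": "VendorA", "Step": "1.A.1", "Detection": ["Telemetry"]},
    {"Vendor": "VendorB", "Step": "1.A.1", "Detection": ["None"]},
    {"Vendor": "VendorA", "Step": "2.B.3", "Detection": ["Technique", "Telemetry"]},
    {"Vendor": "VendorB", "Step": "2.B.3", "Detection": ["General"]},
]


def most_detected(events):
    """Rank steps by the number of vendors with any non-None detection."""
    vendors_by_step = defaultdict(set)
    for event in events:
        # Assumption: any detection type other than "None" counts as detected.
        if any(detection != "None" for detection in event["Detection"]):
            vendors_by_step[event["Step"].strip()].add(event["Vendor"])
    return sorted(
        ((step, len(vendors)) for step, vendors in vendors_by_step.items()),
        key=lambda item: item[1],
        reverse=True,
    )


detected_ranking = most_detected(events)
for step, vendor_count in detected_ranking:
    print(vendor_count, step)
```

Using a set of vendors per step keeps the count correct even if a vendor shows up more than once for the same step.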

DIY

I’ve updated the Splunk app on Splunkbase so you can play with the data and do your own analysis.

- Download Splunk for free (Windows, macOS, Linux)

- Download and install the EDR evaluations app from Splunkbase or GitHub to play with the data yourself.

Drop me a line if you do your own analysis and find something interesting!